The mind-bending arc of the AI universe

I spent 2 weeks reading the history of AI and now I'm an expert

I’m a history geek (I have a sweatshirt from the History of Byzantium podcast), so I figured the best way to begin my AI exploration was to dive into its historical arc and how we arrived at today’s inflection point.

The History

Humans have long dreamed of turning lifeless matter into sentient beings (see: Frankenstein and even older myths), but AI as we know it—programming machines to “reason”—took shape in the 1950s. That’s older than I expected.

From the start, computing and AI evolved side-by-side.

There are deeper dives elsewhere, so I’ll keep this to major eras and inflection points.

1950s–1960s: Started from the bottom...

Theoretical foundations & early attempts

Turing Test (1950): Alan Turing proposed a benchmark for machine intelligence—if a machine could hold a conversation indistinguishable from a human’s, it could be considered intelligent. It remains both a concept and a challenge.

Dartmouth group (1956): The birth of AI as a field. In their proposal for the workshop, John McCarthy, Marvin Minsky, and their co-organizers conjectured that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

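Moore’s Law (1965): Gordon Moore observed that the number of transistors on a chip was doubling at a steady pace (he first projected every year, then later revised it to roughly every two years). That compounding growth in computing power is what let AI scale with time.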
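To get a feel for what “doubling every two years” adds up to, here is a quick back-of-the-envelope sketch. It assumes a perfectly steady two-year doubling period, which is an idealization, and the function names are my own, made up for illustration.

```python
def doublings(years, doubling_period_years=2.0):
    """How many doublings fit into `years` at a fixed doubling period."""
    return years / doubling_period_years


def growth_factor(years, doubling_period_years=2.0):
    """Multiplicative growth after `years` of steady doubling (idealized)."""
    return 2 ** doublings(years, doubling_period_years)


if __name__ == "__main__":
    for years in (2, 10, 20, 40):
        # e.g. 10 years -> 5 doublings -> 2**5 = 32x
        print(f"{years:>2} years -> about {growth_factor(years):,.0f}x")
```

Under that assumption, ten years gives 32x, twenty years gives about a thousand-fold increase, and forty years gives about a million-fold increase.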

1970s–mid 1990s: Short springs, long winters

Overpromises, high costs, and reality checks

Language translation efforts (1960s): Governments funded early projects to translate Russian to English. These systems relied on direct substitutions and failed to handle nuance or grammar.

AI Winter #1: After dismal results, funding dried up. The failure showed that real meaning goes beyond syntax.

Microworlds (1970s): MIT researchers like Seymour Papert created constrained environments like “Blocks World” where AI could operate—until exposed to real-world complexity.

AI Winter #2: These narrow systems crumbled outside demos, triggering more disappointment.

Expert Systems (1980s): Rule-based programs like MYCIN tried to encode domain expertise. Building them was slow, laborious work.

AI Winter #3: Scaling expert systems proved near-impossible. Adding experts didn’t help—it often introduced contradictions.

1990s–2010s: The (technical) building blocks fall into place

1997 – Deep Blue beats Kasparov: A symbolic win. It didn’t “think” like a human, but showed computational power could outperform us in specific domains.

The rise of the web: Digital content and behavioral data exploded—gold for training algorithms.

Data abundance: Large labeled datasets allowed algorithms to learn rather than rely on static rules.

Cloud computing: Democratized access to compute—crucial for experimentation and training.

Algorithmic breakthroughs: Models like support vector machines, decision trees, and eventually deep neural networks outpaced rule-based systems.

2016 – AlphaGo defeats Lee Sedol: A stunning moment. Deep learning and reinforcement learning combined to master a task once thought uniquely human.

2017–2022: Pay attention. Everything is transforming.

2017 – Transformers arrive: The paper “Attention Is All You Need” introduced the transformer architecture, which made it practical to model long-range context and to run the computation in parallel.

Why it mattered: Transformers enabled leaps in translation, summarization, and text generation.
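For the curious, here is a toy sketch of the scaled dot-product attention at the heart of that paper, written in plain Python. It is a simplification under my own assumptions: no learned weights, no multiple heads, no positional information, and the function names are hypothetical. The idea it shows is that every position scores itself against every other position, near or far, and then takes a weighted average of their values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key, however far apart they are.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    keys = [[1.0, 0.0], [1.0, 0.0]]
    values = [[0.0, 2.0], [2.0, 0.0]]
    # Both keys match the query equally, so the values are averaged.
    print(attention([[1.0, 0.0]], keys, values))
```

Each query is handled independently of the others, so in a real implementation the loop becomes one big matrix multiplication. That is where the parallelism comes from.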

2022–Today: ...Now we here.

2022 – ChatGPT launches: OpenAI introduced ChatGPT, built on the transformer architecture and trained on a massive text corpus. It could generate natural text, answer questions, write code, and more.

Generative AI explosion: ChatGPT was widely reported as the fastest-growing consumer application to date, and generative AI spread across search, design, education, and software. Open-source tooling and startups flooded in.

Multimodal AI emerges: Systems started integrating text, images, audio, and video—widening AI’s reach.

Scale + fine-tuning: Foundation models now serve as a base layer. Innovation has shifted to fine-tuning, data quality, and application-layer differentiation.

What did I take away from all this?

Conviction and infrastructure make imagination reality

AI’s 75-year journey surprised me. The Dartmouth group saw today’s building blocks clearly.

As humans, we’re great at building on our existing knowledge base in order to imagine what could exist—even if it’s not yet possible. Think Da Vinci’s designs for flying machines in the 15th century.

But imagination alone isn’t enough. The gap between concept and reality depends on infrastructure catching up. The AI winters remind us this isn’t a linear path.

Imagination can fade, shaped by fleeting interest and day-to-day survival. Infrastructure work is often thankless. That’s where conviction comes in—carrying the torch through winters, until mainstream interest returns.

When does the bleeding edge move from academia to industry?

The cutting edge of AI research follows a well-trodden path—from academia to industry.

For decades, universities carried the AI torch. Look back at my timeline: Dartmouth, MIT, and Alan Turing, who was at the University of Manchester when he came up with his test. Gordon Moore was the only early exception; he was at Fairchild Semiconductor when he made the observation that became his “Law.”

Then came 1997 and IBM’s Deep Blue. Gradually, the private sector took the reins. Google’s transformer breakthrough in 2017 sealed the shift.

Academia still matters deeply—but ask yourself: how often do top AI minds move from industry back to academia? Almost never. The reverse? All the time.

So if you’re investing in AI founders today, look for people who were tinkering with these ideas in academia 10 years ago. Their expertise matters—but so does their conviction.

The technology “moat” can dissipate very quickly

Transformers came from Google. AlphaGo, built by DeepMind (a Google subsidiary), also marked a step change for neural networks when it defeated Go grandmaster Lee Sedol. Yet today, Google isn’t the undisputed AI leader; it’s one of several.

Did Google drop the ball? Kind of—but that’s the norm. IBM did it before them. Big technological breakthroughs generally don't translate into enduring dominance.

Why? Secrets don’t stay secret. Competitors catch up or innovate around the moat. It’s a good reminder: in tech, first isn’t always best.

Is non-consensus creativity humanity’s last redoubt?

AI is built on human data, history, and math. It learns patterns. But creation—the act of producing something original—still feels uniquely human.

AI tends to reflect consensus. It generates, but does it create?

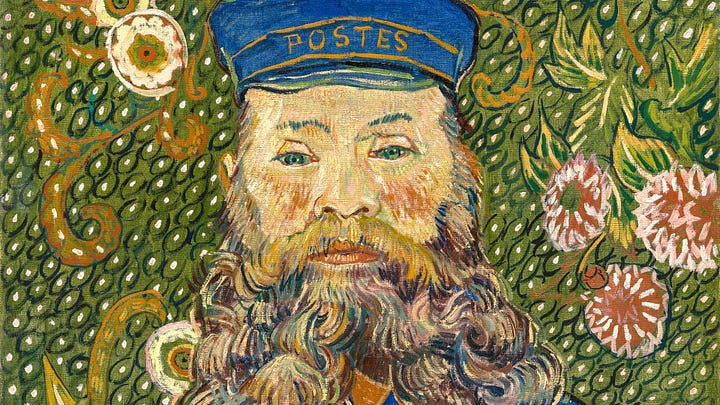

Last week, my wife and I visited the MFA’s Van Gogh exhibit. It featured works by Dutch painters Van Gogh cited as inspiration. Yet his own paintings look nothing like theirs.

It made me wonder: if you trained an AI on all Van Gogh’s influences, would it paint The Postman? Probably not.

Even if you included every detail of his life—childhood, mental illness, relationships, letters—could you prompt a model to make a Van Gogh? I doubt it.

Why? Because AI optimizes for consensus. Genius usually breaks from it.

That’s what I’m still chewing on. Can AI ever produce something of original, creative genius?

I don’t mean just a painting—I mean a contrarian take on a historical event, a novel investment thesis, or even a blog post title.

ChatGPT’s answer to this question:

“A calculator can beat any human at arithmetic but isn’t called a ‘math genius.’ Similarly, AI might generate beautiful outputs, but that doesn’t make it a ‘creative genius’—yet.”

But I’m more interested in your (human) thoughts.

— Hani

Sources:

https://glasswing.vc/blog/research/the-history-of-artificial-intelligence/

https://developer.nvidia.com/blog/deep-learning-nutshell-core-concepts/

OpenAI. 2024. ChatGPT GPT-4-turbo. https://chatgpt.com/

https://www.mfa.org/exhibition/van-gogh-the-roulin-family-portraits